At work, I started building a .NET assembly that would probably find its way into a number of the server processes and applications around the shop. This particular assembly was going to end up containing quite a few external open source references that I didn't want to expose to the consumer of my library.

I set out to meet two simple requirements:

- Easy to use. It should take nothing more than adding a reference to the assembly (and then using it).

- The consumer should not have to deal with the five open source libraries it depends on. Those are an implementation detail; there's no need to expose those assemblies to the consumer, let alone make the consumer manage the assembly files.

I originally got the idea from Dru Sellers’ post http://codebetter.com/blogs/dru.sellers/archive/2010/07/29/ilmerge-to-the-rescue.aspx

I gave ILMerge a try. In a post-build event on the project, I ran ILMerge and generated a single assembly, using ILMerge's internalize option so my assembly wouldn't expose all of its open source dependencies through Visual Studio's IntelliSense.

This almost gave me the output I wanted: a single assembly, compact and easy to use. Unfortunately, when I tried to use the assembly I started seeing .NET serialization exceptions. Objects serialized by my ILMerged assembly could not be deserialized on the other end, because there the type lived in the original assembly rather than in the merged one, and .NET binary serialization records which assembly each type came from. (Maybe there's a way to work around this, but I didn't have time to figure it out; I'd love to hear any comments.)

So ILMerge appeared to be out, what next?

My coworker, Shawn, suggested I try storing the assemblies as resources embedded in my assembly. He uses the SmartAssembly product from Red Gate in his own projects, and mentioned that it can merge all of your assemblies into a single executable by storing them in a .NET resource within your assembly/executable. This seemed easy to accomplish, so I thought I'd give it a try.

How I did it.

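Step 1: Add the required assemblies as resources to your project. I chose the Resources.resx route and added each assembly file to Resources.resx. I like this because of how simple it is to get the items back out: each file shows up as a byte[] property on the generated Resources class (dots in the file name become underscores, e.g. Resources.Microsoft_Practices_ServiceLocation).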

Step 2: We need to hook in at the first point of execution (Main(…) in an application). In my case this was a library with a single static factory class, so I put the following code in that factory's static constructor.

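```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class SomeFactory
{
    static SomeFactory()
    {
        // Simple assembly name -> raw bytes of that assembly, taken from the
        // byte[] properties generated for each file added to Resources.resx.
        var resourcedAssembliesHash = new Dictionary<string, byte[]> {
            {"log4net", Resources.log4net},
            {"Microsoft.Practices.ServiceLocation", Resources.Microsoft_Practices_ServiceLocation},
        };

        // Raised when the runtime fails to locate an assembly on its own.
        AppDomain.CurrentDomain.AssemblyResolve += (sender, args) =>
        {
            // Get only the simple name from the fully qualified assembly name
            // (new AssemblyName(args.Name).Name is a cleaner way to do this).
            // EX: "log4net, Version=??????, Culture=??????, PublicKeyToken=??????, ProcessorArchitecture=??????" - should return "log4net"
            var assemblyName = args.Name.Split(',').First();

            if (resourcedAssembliesHash.ContainsKey(assemblyName))
            {
                // Load the assembly straight from its bytes; nothing is read from disk.
                return Assembly.Load(resourcedAssembliesHash[assemblyName]);
            }

            // Not one of ours: let the runtime (or another handler) deal with it.
            return null;
        };
    }
}
```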

I’ll talk a little about each step above.

```csharp
var resourcedAssembliesHash = new Dictionary<string, byte[]> {
    {"log4net", Resources.log4net},
    {"Microsoft.Practices.ServiceLocation", Resources.Microsoft_Practices_ServiceLocation},
};
```

The first chunk is a lookup table (key = assembly name, value = byte array of the actual assembly). We will use it to load each assembly by name when the runtime requests it.

```csharp
AppDomain.CurrentDomain.AssemblyResolve += (sender, args) =>
{
    ...
};
```

Next we hook into the AppDomain's AssemblyResolve event. It is raised when the runtime can't find an assembly through its normal probing, and it lets us decide, given the requested assembly name, where to load that assembly from. Think external web service, some crazy location on disk, a database, or, in this case, a resource within the executing assembly.

```csharp
// Get only the simple name from the fully qualified assembly name
// (new AssemblyName(args.Name).Name is a cleaner way to do this).
// EX: "log4net, Version=??????, Culture=??????, PublicKeyToken=??????, ProcessorArchitecture=??????" - should return "log4net"
var assemblyName = args.Name.Split(',').First();
```

Next we figure out the simple name of the assembly being requested. My original implementation used args.Name.Split(',').First() to pull the simple name out of the full assembly name, but as I was writing up this post I thought there must be a better way to do this. There is: new AssemblyName(args.Name).Name parses the full display name and hands back just the simple name. (AssemblyName.GetAssemblyName(args.Name) won't do the job, though; it expects the path to an assembly file, not a display name.)
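To compare the two ways of getting the simple name, here is a small sketch. The class and method names (AssemblyNameParsing, BySplit, ByAssemblyName) are made up for illustration; AssemblyName and its Name property are the real System.Reflection API.

```csharp
using System.Reflection;

// Hypothetical helper comparing two ways to get the simple name out of a
// full assembly display name such as the one in ResolveEventArgs.Name.
public static class AssemblyNameParsing
{
    // The approach from the original handler: everything before the first comma.
    public static string BySplit(string displayName) =>
        displayName.Split(',')[0].Trim();

    // Let the framework parse the display name instead.
    public static string ByAssemblyName(string displayName) =>
        new AssemblyName(displayName).Name;
}
```

Besides Name, the parsed AssemblyName also exposes Version, CultureInfo and GetPublicKeyToken(), which could come in handy if you want to check what version was requested before handing back an embedded copy.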

```csharp
if (resourcedAssembliesHash.ContainsKey(assemblyName))
{
    return Assembly.Load(resourcedAssembliesHash[assemblyName]);
}
```

Next we check whether the assembly name exists in the dictionary we declared at the start and, if so, load the assembly from its bytes with Assembly.Load.

```csharp
    return null;
};
```

Otherwise, the requested assembly is not one we know about, so we return null, which tells the runtime (and any other AssemblyResolve handlers) that we can't help with this one.
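Putting those pieces together, here is a sketch of how the handler could be tightened up. It is not the code from Step 2: EmbeddedAssemblyResolver is a hypothetical helper, and it assumes the raw bytes still come from Resources.resx. It adds AssemblyName parsing, a case-insensitive lookup, and a cache so each embedded assembly is loaded only once, because Assembly.Load(byte[]) returns a fresh copy on every call and AssemblyResolve can fire again for a name we already served.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical helper: serves assemblies from in-memory byte arrays
// (e.g. the byte[] properties generated from Resources.resx).
public sealed class EmbeddedAssemblyResolver
{
    private readonly Dictionary<string, byte[]> _rawAssemblies;

    // Assemblies we've already loaded, so the same bytes are never loaded twice.
    private readonly Dictionary<string, Assembly> _loaded =
        new Dictionary<string, Assembly>(StringComparer.OrdinalIgnoreCase);

    private readonly object _gate = new object();

    public EmbeddedAssemblyResolver(IDictionary<string, byte[]> rawAssemblies)
    {
        // Simple assembly names are matched case-insensitively.
        _rawAssemblies = new Dictionary<string, byte[]>(rawAssemblies, StringComparer.OrdinalIgnoreCase);
    }

    public void Register(AppDomain domain) => domain.AssemblyResolve += OnAssemblyResolve;

    // Public so the lookup logic can be exercised without a real resolve event.
    public Assembly Resolve(string requestedName)
    {
        string simpleName = new AssemblyName(requestedName).Name;

        lock (_gate)
        {
            if (_loaded.TryGetValue(simpleName, out Assembly cached))
                return cached;

            if (!_rawAssemblies.TryGetValue(simpleName, out byte[] bytes))
                return null; // not one of ours

            Assembly assembly = Assembly.Load(bytes);
            _loaded[simpleName] = assembly;
            return assembly;
        }
    }

    private Assembly OnAssemblyResolve(object sender, ResolveEventArgs args) => Resolve(args.Name);
}
```

From the static constructor, wiring it up would look something like new EmbeddedAssemblyResolver(resourcedAssembliesHash).Register(AppDomain.CurrentDomain);.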

Step 3: Finally, I created a post-build event that removes the embedded assemblies from the bin\[Debug|Release] folders. This let me have a test project that depended only on the single assembly and verify that it actually works: it has to load its dependencies to function, and they no longer existed on disk.
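In the test project, a check along these lines could confirm that a dependency really came from the embedded bytes rather than from a stray file. EmbeddedLoadCheck is a hypothetical helper; it relies on the documented behavior that Assembly.Location is an empty string for an assembly loaded from a byte array.

```csharp
using System;
using System.Reflection;

// Hypothetical test helper: true if an assembly with the given simple name is
// loaded in the current AppDomain and was loaded from a byte array
// (Assembly.Location is empty in that case) rather than from a file.
public static class EmbeddedLoadCheck
{
    public static bool LoadedFromBytes(string simpleName)
    {
        foreach (Assembly assembly in AppDomain.CurrentDomain.GetAssemblies())
        {
            // Dynamic (Reflection.Emit) assemblies throw on Location; skip them.
            if (assembly.IsDynamic)
                continue;

            if (string.Equals(assembly.GetName().Name, simpleName, StringComparison.OrdinalIgnoreCase))
                return string.IsNullOrEmpty(assembly.Location);
        }

        // Not loaded (yet), so it certainly wasn't loaded from bytes.
        return false;
    }
}
```

After exercising code that actually uses log4net, EmbeddedLoadCheck.LoadedFromBytes("log4net") should return true if the resolver served it from resources.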

Please consider:

- You may not have fun if you embed some of the same assemblies that your other projects reference (especially if they are different versions).

- I can't say I have completely wrapped my head around all the problematic cases this strategy could bring to life. (Use with care.)

(That’s Vertigo)


![image_thumb9[4]](https://lh4.ggpht.com/-T4MVc7vKolw/TiJKACBtvyI/AAAAAAAAAQU/Pn71WMECKcQ/image_thumb94_thumb.png?imgmax=800)

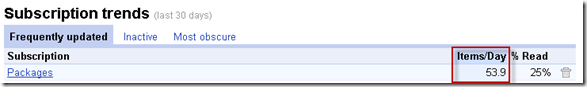

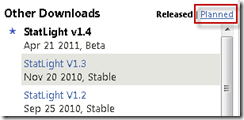

By the way, there's already a project for watching NuGet packages through RSS here: NuGetFeed.org.

With NuGetFeed.org you can follow your favorite NuGet packages through RSS. A feature called MyFeed lets you add a list of packages you want to follow. If you use Google Chrome, there's an extension as well, and a Visual Studio add-in is also available. Hope you will find NuGetFeed.org useful.